October 14, 2025

Cooling the Cloud: Innovation at the Heart of Data Centers

Season 5 Episode 9: As AI drives growth in data centers, climate tech is helping build a more sustainable digital future.

The digital world is expanding at lightning speed, powered by vast data centers that have become the critical infrastructure of our time. From streaming services to AI breakthroughs, these facilities are the engines of growth and innovation. With that power come important challenges: massive energy use, complex cooling demands, and the need to build sustainability into an industry that is constantly evolving.

In this episode, we hear from Scott Smith, Director of Mission Critical Offerings at Trane Technologies, and Dr. Dereje Agonafer, Presidential Distinguished Professor of Mechanical and Aerospace Engineering at the University of Texas at Arlington. Together, they unpack the scale of the data center boom, explore the cutting-edge cooling technologies reshaping the industry, and explain why collaboration between industry and academia is essential to building a more energy-efficient data center model and a more sustainable digital future.

- The digital world is expanding at lightning speed. Every AI tool, every search, every streaming service depends on vast data centers working behind the scenes. These facilities have become the power plants of the information age, but with that power comes an enormous challenge.

- The opportunities for liquid cooling are just now growing exponentially, right? I think it's important to keep in mind: is the technology we're utilizing sustainable?

- It's a race unlike anything that we've seen before. Data centers are helping us crunch data, amplify growth and fuel research and innovation. But the technology's moving so fast that operators are asking: will the facilities we build today even be relevant in two years? That's why innovation in cooling, smarter, more efficient, more sustainable, is not just a nice-to-have. It's the only way forward.

- You're listening to "Healthy Spaces", the podcast where experts and disruptors explore how climate, technology and innovation are transforming the spaces where we live, work, learn and play. I'm Dominique Silva, Marketing Leader at Trane Technologies.

- And I'm Scott Tew, Sustainability Leader at Trane Technologies, and on season five of "Healthy Spaces", we're bringing you a fresh batch of uplifting stories featuring inspiring people who are overcoming challenges to drive positive change across multiple industries. We'll explore how technology and AI can drive business growth and help the planet breathe a little bit easier.

- Hey, Scott. Welcome back.

- Hello. I'm glad to be back. You know, I miss hanging out with the "Healthy Spaces" team, but I loved hearing about AI, especially the focus on BrainBox AI.

- Yeah, it was super interesting, and today we're kind of staying in the same area, Scott, because this massive boom in AI has caused a huge growth in another area, data centers.

- I hear so much about data centers in my world of sustainability. I still can't get over the size of these things. Some of them are buildings the size of football fields, they're filled--

- Right.

- Wall to wall, floor to ceiling with servers, all of them generating a massive amount of heat.

- Yep. And now with the rise of AI data centers, the need for energy efficiency is increasing even more. And that's actually raising the bar for thermal management systems as well. It's not enough anymore to just keep the servers from overheating. Companies are having to rethink how the entire system runs.

- Hmm. So speaking of rethinking things, who's going to help break this down for us?

- All right, so our first guest today is Scott Smith, who has, get this title, Director of Mission Critical Offerings at Trane. I feel like he should have a movie, right? Like, just for himself. Scott works directly with data center operators, so he's seeing really up close how this boom is reshaping the industry.

- All right, I'm looking forward to this.

- You go back to the original cloud searches and, you know, searching for things on the internet. Those are actually relatively simple transactions, if you will. Compare that to what we're seeing today with AI: incredibly complex, just in terms of the computational power that's required. So as you think about that growth, that migration to more and more complex transactions, that's really what has spawned this. Think about AI six, nine months ago: nobody used AI. Very few people did. Now you and I probably use it every single day.

- That's right.

- You do a search and before you know it, it's actually coming back with an AI response. Every one of those requires computational horsepower. That computational horsepower is taking place inside of a data center. And so, you know, you have these massive data centers, huge number of racks containing either GPUs or CPUs, again, doing that computational horsepower. That's really what's driving all of this.

- From your experience in working with these clients, right? Every single day, what do you think keeps data center operators awake at night with an industry that is changing so fast?

- Yeah. Dominique, there's two things that I hear about all the time. One is the rate at which they can develop new capacity. It used to be a race to get the equipment. Now it's a race to see how quickly they can bring it online. So you've got a lot of folks looking at ways to, hey, pretest and pre-commission the units, our cooling units, before they arrive on site. Again, it's all to bring the site from, you know, bare dirt to an operational data center as fast as possible. And the other thing I think keeps people up at night is how long the design is gonna be valid for. You know?

- Right.

- Again, this technology is changing very quickly. Are they building a data center that in 18 or 24 months might be somewhat obsolete?

- So you were talking about these data centers, they're housing all of this. You called it computational horsepower, which I love. 'cause I just imagine these like thousands of horses, right? So they're generating all of this heat, which we need to remove, right? What role does the cooling equipment play in keeping these data centers functioning?

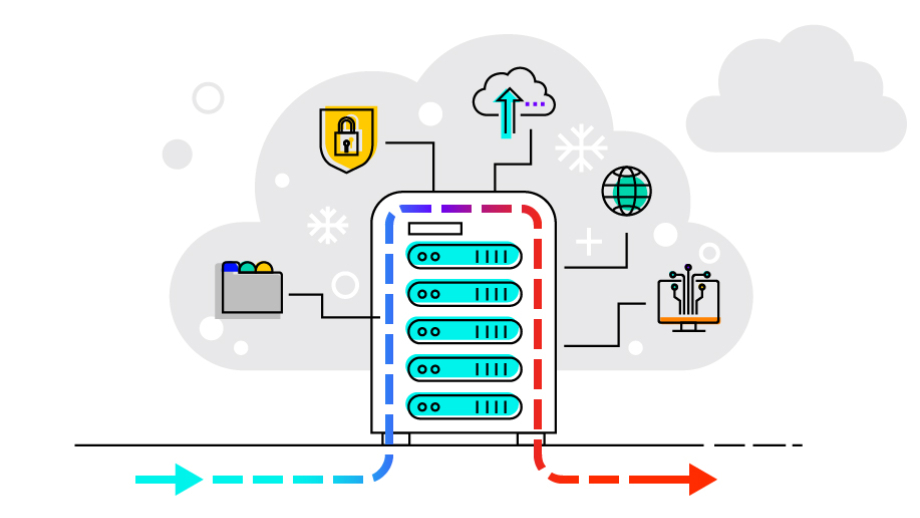

- So at the base of all this are the chips, and these chips, again, are doing the computations. They're generating heat. Okay. That heat has to get away from the chip, or else the chip will overheat and eventually just shut down. That heat can be removed a couple of different ways. The traditional way was through air, through convection. Air would get blown across the chip, and that hot air would get removed out of the data hall through some form of heat rejection. What's happened recently, though, as densities have increased, is that we're starting to see a lot more customers migrate over to what's called liquid cooling, or direct-to-chip. That's where the chip is directly coupled to a cold plate. So instead of convection cooling, you now have conduction. The coolant, that fluid, removes the heat from the chip and eventually out of the data hall.
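
A quick back-of-the-envelope sketch shows why rising densities push operators from air toward liquid. It applies the steady-state sensible-heat balance Q = m_dot * c_p * delta_T to a single heat source. The 1,200 W load, the 10 K coolant temperature rise and the rounded fluid properties are illustrative assumptions, not figures supplied by the guests.

```python
"""Compare the coolant flow needed to remove the same heat with air vs. water.

Steady-state sensible-heat balance: Q = m_dot * c_p * delta_T.
Fluid properties are rounded room-temperature values (assumed).
"""

# Approximate properties near 25 C (assumed): c_p in J/(kg*K), density in kg/m^3
FLUIDS = {
    "air": {"cp": 1005.0, "density": 1.2},
    "water": {"cp": 4186.0, "density": 997.0},
}


def mass_flow_kg_s(heat_w: float, cp: float, delta_t_k: float) -> float:
    """Mass flow (kg/s) that absorbs heat_w watts while warming by delta_t_k kelvin."""
    return heat_w / (cp * delta_t_k)


def volume_flow_l_s(heat_w: float, fluid: str, delta_t_k: float) -> float:
    """Volumetric flow (litres per second) of `fluid` for the same heat load."""
    props = FLUIDS[fluid]
    m_dot = mass_flow_kg_s(heat_w, props["cp"], delta_t_k)
    return m_dot / props["density"] * 1000.0  # m^3/s -> L/s


if __name__ == "__main__":
    heat_w = 1200.0  # illustrative heat load in watts (assumption)
    rise_k = 10.0    # allowed coolant temperature rise in kelvin (assumption)
    air = volume_flow_l_s(heat_w, "air", rise_k)
    water = volume_flow_l_s(heat_w, "water", rise_k)
    print(f"air:   {air:.1f} L/s")
    print(f"water: {water:.4f} L/s")
    print(f"ratio: about {air / water:,.0f}x more air than water by volume")
```

Under these assumptions, water stores roughly 3,500 times more heat per unit volume than air. The same heat can therefore be carried by a tiny fraction of the volume flow, which is the basic physical reason liquid cooling scales to denser racks.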

- Are there ways that we can continue to cool down data centers, but in a more efficient and sustainable way?

- There's a couple of things I'll just highlight here. The first is taking an existing data center, an existing design, and asking how it can simply be operated more efficiently. Okay. And that's really trimming, you know, two, three, 4% kind of numbers, whether that be through thermal storage or, you know, demand shaving. There are several ways it can be done, but I'll tell you, that's not transformational. There is some transformational stuff taking place, though. You've got people looking at nuclear, small nuclear reactors. Onsite generation of power is happening, and people are actively exploring it. The other thing people are doing, and you see a lot of this out west, is tapping into natural gas. We've got huge natural gas basins. So the question is, how difficult would it be for a large data center to get natural gas out of the ground and into a turbine, creating power off the grid? So, tremendous opportunities again, but they've gotta find a way to create their own power.

- When I started studying the data center industry a little, one thing I noticed is that many data centers were actually located in northern Europe. And one thing northern Europe has in common is the climate, right? It tends to be a little bit cooler. So I started hearing a lot about this concept called free cooling for data centers. Can you explain it to me? 'Cause in my mind, nothing comes for free, right? I'm Portuguese. So can you explain to our listeners what free cooling exactly means?

- So free cooling is a relatively simple technique. Instead of using the vapor compression cycle, with a refrigerant, a compressor, all of these things that consume a lot of power, the concept of free cooling is just this: you have coils and you have a fan. Essentially, the fan pulls outdoor air across the coil, cooling the fluid inside it, and the air exits through whatever free cooling design you might have. It works very well in low temperature or low ambient conditions, as in your example of northern Europe. It doesn't work nearly as well as you get into southern Europe or the southern United States, which are even hotter.
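
The decision described here can be sketched as a simple mode selector for a water-side free-cooling loop: use coils and fans when it is cold enough, and fall back to compressors when it is not. The function name, the 3 K coil approach temperature and the example setpoints are hypothetical. Real controllers also account for humidity, fan energy and equipment limits.

```python
from enum import Enum


class CoolingMode(Enum):
    FREE = "free cooling only (fans and coils)"
    PARTIAL = "free cooling plus compressor trim"
    MECHANICAL = "compressor (vapor compression) only"


def select_mode(ambient_c: float, supply_setpoint_c: float,
                return_water_c: float, approach_k: float = 3.0) -> CoolingMode:
    """Pick a cooling mode for a hypothetical free-cooling (economizer) loop.

    Assumes the coil can chill water to roughly ambient + approach_k:
    - at or below the supply setpoint: fans and coils alone suffice;
    - still below the return water temperature: the coil pre-cools the
      water and the compressor only trims the rest;
    - otherwise: the coil cannot help and the compressor does all the work.
    """
    coil_leaving_c = ambient_c + approach_k
    if coil_leaving_c <= supply_setpoint_c:
        return CoolingMode.FREE
    if coil_leaving_c < return_water_c:
        return CoolingMode.PARTIAL
    return CoolingMode.MECHANICAL


if __name__ == "__main__":
    # Illustrative ambient temperatures, e.g. a cool northern site vs. a hot southern one.
    for ambient in (5.0, 22.0, 35.0):
        mode = select_mode(ambient, supply_setpoint_c=20.0, return_water_c=30.0)
        print(f"{ambient:5.1f} C ambient -> {mode.value}")
```

The more hours per year a site spends in the first branch, the less compressor energy it uses. This is why cooler climates favor free cooling.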

- Let me turn it around, Scott, because we've been talking a lot about heat. You know, we've kind of been talking about the traditional format, which is reject heat, and typically when we reject heat, we're either sending it to the air or into the lake, you know, whatever that is. But, I mean, heat, right? Heat is energy. I mean, couldn't we be using that energy for something else?

- The concept of heat recovery is not new. We've been doing various forms of heat recovery within Trane for years, on both air-cooled and water-cooled chillers. The challenge is, what do you do with that recovered heat? That energy doesn't travel very well. Who's gonna need that heat, that energy? Over long distances, it's very difficult. As you get closer and closer, and you start building up around data centers, I think this opportunity for heat recovery is gonna become more and more obvious. The other thing that's happening, and you've already seen it in Europe, and I suspect the North American regulatory groups will follow, is that it will become law. There will be a need to recover that wasted heat, that wasted energy.

- Right.

- Exactly what's done with it, yet to be seen. But we have seen some of our larger customers start to inquire about our capabilities in this space.

- Right. And I guess, again, comparing Europe to North America, Europe is a lot more dense. So in a lot of cases, as we see a boom in data centers, they have to be built close to communities, close to cities. So that thing you mentioned, you know, we've got all this heat, but who's going to take it? It makes a lot of sense, especially in the many European countries that already have established district heating networks. You can now use the data centers to feed into those district heating networks rather than turning on your hot water plants. So it becomes really interesting, not just from an economic perspective, not just from an environmental perspective. It even changes a little bit the perception that we as a society have of data centers, right? These big, massive things that are supposedly just destroying our ecosystem can actually have a net positive value. You've probably realized I love talking about heat recovery, right? It's because, as the mechanical engineer that I am, whenever people talk about rejecting heat, I always ask the question, "Where is that heat going, and where could it be going instead?" So Scott, you have been in the cooling industry for quite a long time. You've been in the data center industry for five years, which today is kind of equivalent to 50 at the pace that things have been going. In five years' time, what do you think this is gonna look like? Where is cooling technology heading? What do you see coming on the horizon?

- So this is kind of an interesting question, right? You go back to Moore's law, and the rate at which things are progressing is only speeding up. All of the chip makers are talking about development cycles getting faster and faster. So what I suspect is gonna happen is that cooling technologies are also gonna have to develop faster and faster. Direct-to-chip, or liquid cooling, is relatively new. I would say it's come on in the last 18 to 24 months. What we're seeing on the horizon is what we call two-phase direct-to-chip. Instead of using water or some variation of water, you actually use a refrigerant, and it's an even more efficient way to remove heat. You get a phase change with the refrigerant: as it picks up heat from the chip, it turns to vapor, and that's how it carries the heat away. After that, what's on the horizon is immersion, where the chip is actually immersed in a fluid. There's a lot of infrastructure cost associated with that, so I'm not sure it'll really catch on as much as two-phase direct-to-chip, but it's an even more efficient way to remove heat.
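
One reason phase change helps can be sketched by comparing the heat absorbed per kilogram of coolant. Single-phase water only warms up (q = c_p * delta_T). An evaporating refrigerant absorbs latent heat (q = x * h_fg, where x is the fraction that boils off). The latent heat of 170 kJ/kg (roughly R-134a-like near room temperature), the 50% exit vapor quality and the 10 K water temperature rise are all illustrative assumptions, not the design values of any product mentioned in the episode.

```python
"""Heat absorbed per kilogram of coolant: single-phase water vs. evaporating refrigerant.

Single-phase: q = c_p * delta_T  (the coolant warms up).
Two-phase:    q = x_out * h_fg   (part of the liquid boils at ~constant temperature).
All property values below are rounded, illustrative assumptions.
"""

WATER_CP = 4186.0        # J/(kg*K), liquid water near room temperature
REFRIGERANT_HFG = 170e3  # J/kg, assumed latent heat (roughly R-134a-like near 25 C)


def single_phase_flow_kg_s(heat_w: float, delta_t_k: float = 10.0) -> float:
    """Water mass flow needed if the water warms by delta_t_k kelvin."""
    return heat_w / (WATER_CP * delta_t_k)


def two_phase_flow_kg_s(heat_w: float, exit_quality: float = 0.5) -> float:
    """Refrigerant mass flow needed if a fraction exit_quality of it evaporates.

    Two-phase cold plates are commonly run with only part of the liquid
    evaporating, to keep a margin against dry-out, so exit_quality < 1 is assumed.
    """
    if not 0.0 < exit_quality <= 1.0:
        raise ValueError("exit_quality must be in (0, 1]")
    return heat_w / (exit_quality * REFRIGERANT_HFG)


if __name__ == "__main__":
    heat_w = 1200.0  # illustrative chip heat load (assumption)
    water = single_phase_flow_kg_s(heat_w)
    refrigerant = two_phase_flow_kg_s(heat_w)
    print(f"water (10 K rise):          {water * 1000:.1f} g/s")
    print(f"refrigerant (50% boil-off): {refrigerant * 1000:.1f} g/s")
```

With these numbers, the refrigerant needs about half the mass flow of the water. Just as important, the evaporating stream stays close to its saturation temperature, while the single-phase water warms by the full assumed 10 K across the cold plate.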

- All right. So Scott, are there any cool projects that you've worked on, or are working on, that you feel are really pushing the boundaries of what is possible?

- Yeah, there's a couple that we've been actively working on. Data centers have gotten larger, and these customers of ours want to simplify their infrastructure if they can, so they want larger and larger equipment from us. As an example, we recently announced a three-megawatt, or roughly 850-ton, air-cooled chiller. Prior to that, the industry norm was closer to two megawatts. So truly, you know, transformational. We also have one of the largest, if not the largest, water-cooled chillers in the industry, at almost 18 to 20 megawatts. And then lastly, with CDUs, which are used for direct-to-chip cooling, we're seeing the same thing happen. One megawatt was kind of the industry standard, and we've seen that start to grow. We recently announced a two-and-a-half-megawatt unit that is scalable up to 10 megawatts. All of this, again, is designed to match that computing density. The data halls are getting bigger, and the overall size keeps getting bigger.
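
The "three megawatt, or about 850 ton" equivalence follows from the standard definition of a ton of refrigeration: 12,000 BTU/h, or roughly 3.517 kW of heat removal. A minimal conversion sketch:

```python
"""Convert between cooling capacity in megawatts and tons of refrigeration."""

# 1 ton of refrigeration = 12,000 BTU/h, about 3.517 kW of heat removal.
KW_PER_TON = 3.51685


def mw_to_tons(megawatts: float) -> float:
    """Cooling capacity in MW (thermal) -> tons of refrigeration."""
    return megawatts * 1000.0 / KW_PER_TON


def tons_to_mw(tons: float) -> float:
    """Tons of refrigeration -> cooling capacity in MW (thermal)."""
    return tons * KW_PER_TON / 1000.0


if __name__ == "__main__":
    for mw in (2.0, 3.0, 20.0):
        print(f"{mw:5.1f} MW ~ {mw_to_tons(mw):7,.0f} tons")
```

Three megawatts comes out at about 853 tons, which matches the rounded figure quoted. The same conversion puts a 20 MW water-cooled chiller at roughly 5,700 tons.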

- So I'm curious to hear from you, Scott, whether you think other industries, other types of buildings, are going to benefit from all the innovation that has been created for data centers. Do you see any technology that can be transferred?

- Dominique, there's two things that come to my mind when you ask that question. The first is probably around controls, and specifically autonomous controls. How do you operate and control your equipment, your building, your data hall most efficiently? This isn't necessarily something that started in data centers, but I think data centers are gonna push that technology further and further. The reason being, again, any power savings, any energy efficiency savings, can be converted back into IT and computational effort. The other technology I'll touch on just quickly is thermal storage. There are lots of techniques for this, but the idea is: how do I ride my way through the peaks and valleys of heat and energy rejection? It's something that's been around for a while, and we're seeing more and more applications for it in data centers. We're gonna push it further. It's not just gonna be ice, there'll be other phase change materials. And again, it will likely find its way back into comfort cooling.

- Scott Smith gave us a clear picture of the scale of the challenge: data centers growing at breakneck speed, and the pressure that puts on cooling. Our next guest has dedicated his career to this very problem. Dr. Dereje Agonafer is a Presidential Distinguished Professor of Mechanical and Aerospace Engineering at the University of Texas at Arlington. He's also one of the pioneers of data center cooling research. After 15 years at IBM leading thermal innovations for computer systems, he moved into the academic world, where he's now training a new generation of engineers and has driven breakthroughs in chip cooling and thermal management. To kick off the interview, I asked him to give me the cold, hard facts. How big is our challenge around AI chips?

- So these AI chips, or accelerators, are GPUs, graphics processing units, and they consist of thousands of cores. You know, thousands of cores. These cores are very energy efficient, but also power-hungry. So let me give you a number. The Blackwell, you've heard about it. Big news, the Nvidia Blackwell. In 2024 to 2025, it's about 1,200 watts, right? 1.2 kilowatts.

- In a single chip?

- Yes, yes.

- Wow!

- And then in 2026, '27, it's expected to be about 1,800 watts. And then fast forward to 2030, we're talking about 2,000 watts.

- Generally, how much would a rack have? How many chips are we looking at per rack?

- Flagship GPU racks, right? These are the AI racks, whatever you wanna call them. In 2020 they were at 40 kilowatts. 40 kilowatts, air-cooled. Absolutely air-cooled. Now, the Blackwell system projection is 600 kilowatts, and very soon we are going to be talking about one megawatt. So we're really talking about liquid cooling, and we can discuss what kind of liquid cooling.

- That's a perfect segue for what I wanted to ask you. What are the most promising approaches to cooling down these chips sustainably and efficiently?

- First, let me give you some scary numbers. The State of Virginia is sort of like the data center of the world. 26% of the electricity used in the State of Virginia goes to data centers. Fast forward to 2030, and that is projected to be 46%.

- Almost half.

- Yeah, it's almost half. Yes, exactly. Now let's talk about the State of Texas. It's currently about 4.5%, and it's probably gonna be between 10 and 12%. So there's gonna be a significant increase, and energy efficiency is absolutely and totally critical.

- I think it's very clear, and you pointed it out, right? That the traditional way of doing it is not gonna be sustainable.

- To put it in perspective, take what we call direct evaporative cooling. This is really what Facebook was using in Prineville, Oregon, and so on. Cooling one megawatt of rack load with this kind of technology requires 6.6 million gallons of water per year, right? So it's very, very water and power intensive. So we are now moving to liquid cooling, and there are different types of liquid cooling technologies. We have what we call direct-to-chip cooling, or cold plate technology. In this particular case, you mount the cold plate on top of these high-power devices, and it takes the heat just from that region. About 80% of the power is really in these high-power devices, the CPUs and GPUs. So you use cold plates for those, and maybe air cooling for the rest of the system. That cold plate approach is what's called direct-to-chip. My view is that it's gonna be around for a while, and the reason I say that is there's a lot of opportunity to improve it.
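
The 6.6 million gallons per year quoted for direct evaporative cooling is easier to picture in other units. The sketch below converts it, assuming the figure is per megawatt of load running continuously all year. For scale, it also computes how much water would have to evaporate if 1 MW of heat were rejected entirely as latent heat, as in an idealized evaporative heat-rejection process. Direct evaporative air cooling works differently, so this is a reference point rather than a check on the quoted number. The latent-heat value is an approximation.

```python
"""Put an annual water figure for evaporative cooling into other units.

Also estimates the water that would have to evaporate if the heat were
rejected purely as latent heat, as an idealized reference point.
"""

LITRES_PER_US_GALLON = 3.785411784
HOURS_PER_YEAR = 8760
SECONDS_PER_YEAR = HOURS_PER_YEAR * 3600
H_FG_WATER = 2.43e6  # J/kg, approximate latent heat of water near 30 C (assumed)


def gallons_per_hour(gallons_per_year: float) -> float:
    """Average hourly use for a continuous, year-round annual total."""
    return gallons_per_year / HOURS_PER_YEAR


def evaporation_reference_gallons_per_year(heat_mw: float) -> float:
    """US gallons per year that would evaporate to reject heat_mw continuously.

    Assumes all heat leaves as latent heat and 1 kg of water is about 1 litre.
    """
    energy_j = heat_mw * 1e6 * SECONDS_PER_YEAR
    kg_evaporated = energy_j / H_FG_WATER
    return kg_evaporated / LITRES_PER_US_GALLON


if __name__ == "__main__":
    quoted = 6.6e6  # US gallons per year for 1 MW, as quoted in the episode
    litres_m = quoted * LITRES_PER_US_GALLON / 1e6
    print(f"{gallons_per_hour(quoted):,.0f} gal/h, about {litres_m:,.1f} million L/yr")
    ref = evaporation_reference_gallons_per_year(1.0) / 1e6
    print(f"latent-heat-only reference for 1 MW: {ref:.2f} million gal/yr")
```

Averaged over the year, the quoted figure works out to roughly 750 gallons every hour for each megawatt.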

- Now, you do a lot of groundbreaking research, right? What do you see as the biggest holes or maybe gaps in the current cooling technology that innovation still needs to solve for?

- At the end of the day, we have a system. We have a server where we put all these fancy components, then we have manifolds that take the coolant to the rack, and then there are chillers. Then there are the CDUs, the cooling distribution units, which take that heat away from the rack. There's a lot of work there, a lot of innovation. Currently most folks have 200 kW racks, but a lot of people right now are working on one to 1.5 megawatts and higher. So CDUs are a challenge. We have commissioned over 15 CDUs in our lab. The traditional approach in academic research is to do something very fundamental, very basic, right? Maybe: how can I come up with better micro-channel cold plates? How do I make the hydraulic diameter smaller, and so on? Those days are really gone, or should be gone. What needs to happen is that we in academia go to a higher technology readiness level. We happen to have eight racks. Each rack is 25 kW, but we've been able to do all kinds of tests and, luckily, commission the whole liquid cooling process. Not just cold plates, but CDUs and so on. So we need to broaden that. The relationship between academia and industry needs to be more of a partnership.

- So if I kind of pull it back, right? To how do we keep pushing the envelope on what's possible for data center cooling. The two things that I've sort of heard you say is, one, you know, innovation at a component level is gonna keep happening, right? And that's great. What we need to see even more of is innovation at a system level. So more integration, and you can only do that if there is closer collaboration between industry and academia, right? And it's not just the chip maker, it's the chiller manufacturers as well. And it's really everyone working together in this close ecosystem to push the boundaries of what's possible.

- Absolutely. First, I don't wanna discount the component level. We are working on a two-phase technology we're very excited about, called direct-to-chip evaporative cooling. We received about $2.84 million in funding from ARPA-E's COOLERCHIPS program. My team is leading it, but we're collaborating with the University of Maryland, College Park, the University of Illinois Urbana-Champaign and the Illinois Institute of Technology. And we have a great partner, an ex-IBMer who's a consultant, Dr. Roger Schmidt. We are very excited about it. We think we can reach far higher power density. But it's not really just the power. If someone tells you they're cooling this much power, it's really vague. They'd better tell you over how much area.
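
The point about area comes down to heat flux: the power divided by the area it passes through. The 1,200 W device power and both footprints in this sketch are hypothetical, chosen only to show how the same power becomes a much harder cooling problem when it is concentrated.

```python
"""Heat flux: the same power over different areas is a very different cooling problem."""


def heat_flux_w_per_cm2(power_w: float, area_cm2: float) -> float:
    """Average heat flux in W/cm^2 for power_w spread uniformly over area_cm2."""
    if area_cm2 <= 0:
        raise ValueError("area must be positive")
    return power_w / area_cm2


if __name__ == "__main__":
    power_w = 1200.0  # illustrative device power (assumption)
    # Hypothetical footprints: a larger package area vs. a smaller hot region.
    for area_cm2 in (20.0, 8.0):
        flux = heat_flux_w_per_cm2(power_w, area_cm2)
        print(f"{power_w:.0f} W over {area_cm2:4.1f} cm^2 -> {flux:6.1f} W/cm^2")
```

Real chips are not uniform, so local hot spots can run well above the average flux this sketch computes.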

- Now, again, we keep talking about more power, right? And less space. So I wanna pick your brain on another topic, which is, we've been talking about cooling, right? Which is essentially removing heat will allow these chips to keep operating. That heat has to go somewhere, right?

- Yeah.

- Because energy cannot be destroyed. It can only be transferred.

- Almost 100% of it.

- So what role do you see heat recovery playing in the future of thermal management for data centers?

- Actually, that's gonna be a big part of our new lab. We really talk about heat recovery, taking that heat. See, in thermodynamics we have this thing called exergy. If you can have the coolant coming out at a very high temperature relative to room temperature, you have a lot of exergy, and you can extract whatever you want from it. You know, power: you can use it to generate electricity. We are building a new lab, which I think is gonna be probably one of the biggest for academia in the nation. It's about 9,600 square feet, and we're planning to have between six and eight megawatts of power. With that, there are many, many companies we're talking to that are interested in coming and having some of that real estate. CDUs, as I told you, we've been helping commission. They're very, very important things, cooling distribution units. But now we can move to stuff like chillers, and we can be a really fair and unbiased place to test these units. Some companies are even thinking about making some interesting stuff like thermal energy storage systems. Very, very important when we talk about sustainability, right?
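
The link between coolant temperature and exergy can be made concrete with the Carnot factor. For heat Q available at absolute temperature T, with surroundings at T0, the maximum useful work is Q(1 - T0/T). This sketch assumes a 25 C ambient and treats the recovered heat as if it were available at a single constant temperature. That is a simplification, since real coolant cools as heat is drawn off. The results are theoretical upper bounds, and practical heat-to-power conversion recovers considerably less.

```python
"""Exergy (maximum useful work) of heat delivered at temperature T, relative to ambient T0.

For heat Q available at a constant absolute temperature T, the reversible
(Carnot) limit gives  Ex = Q * (1 - T0 / T).
"""


def exergy_fraction(coolant_c: float, ambient_c: float = 25.0) -> float:
    """Carnot factor 1 - T0/T, computed with absolute temperatures in kelvin."""
    t = coolant_c + 273.15
    t0 = ambient_c + 273.15
    if t <= t0:
        return 0.0  # heat at or below ambient is treated as having no work potential here
    return 1.0 - t0 / t


if __name__ == "__main__":
    heat_kw = 1000.0  # 1 MW of recovered heat (illustrative)
    for coolant_c in (35.0, 45.0, 60.0, 80.0):
        frac = exergy_fraction(coolant_c)
        print(f"{coolant_c:4.0f} C coolant: {frac:5.1%} -> {heat_kw * frac:6.1f} kW of exergy")
```

Raising the coolant from 35 C to 60 C more than triples the theoretical work available from the same megawatt of heat. This is why high coolant outlet temperatures make heat recovery more attractive.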

- Can you talk me through, you know, thermal energy storage systems? Why is this an important innovation for data centers? Can you talk us through the thinking there a little bit?

- Different people use it in different ways, but essentially you use phase change materials, which change state between liquid and solid. So you are storing energy and using it as needed. That way you can significantly decrease the requirement for, for example, the chillers, right? Instead of having a 1,000-ton chiller, you might end up needing an 800-ton chiller. So you can talk about being a lot more energy-efficient, and there's a lot of work in energy efficiency. The other thing is that people are doing a great job, my colleagues at IBM in Switzerland and elsewhere in Europe, utilizing waste heat from data centers to heat local homes and so on, right? Heat reuse is going to be a very important component in our new lab.
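
The 1,000-ton versus 800-ton example can be illustrated with a simple hourly simulation. The chiller runs up to its rating, spare capacity charges a thermal store, and the store covers the peak. The load profile, the 800 ton-hour storage size and the lossless storage are hypothetical assumptions for illustration. A real phase-change or ice system has losses, charge-rate limits and control constraints that this sketch ignores.

```python
from dataclasses import dataclass, field


@dataclass
class StorageResult:
    unmet_ton_h: float = 0.0  # cooling load the plant could not cover
    state_of_charge: list[float] = field(default_factory=list)  # stored ton-h after each hour


def simulate(loads_tons: list[float], chiller_tons: float,
             storage_ton_h: float, start_full: bool = True) -> StorageResult:
    """Hourly, lossless peak-shaving model for a chiller plus thermal storage."""
    soc = storage_ton_h if start_full else 0.0
    result = StorageResult()
    for load in loads_tons:  # each entry is one hour at a constant load
        spare = chiller_tons - load
        if spare >= 0:
            soc = min(storage_ton_h, soc + spare)  # charge the store with spare capacity
        else:
            draw = min(soc, -spare)  # discharge the store to cover the shortfall
            soc -= draw
            result.unmet_ton_h += -spare - draw
        result.state_of_charge.append(soc)
    return result


if __name__ == "__main__":
    # Hypothetical day: 700 tons most hours, with a 4-hour, 1,000-ton peak.
    profile = [700.0] * 10 + [1000.0] * 4 + [700.0] * 10
    with_storage = simulate(profile, chiller_tons=800.0, storage_ton_h=800.0)
    without = simulate(profile, chiller_tons=800.0, storage_ton_h=0.0)
    print(f"800-ton chiller + 800 ton-h storage, unmet: {with_storage.unmet_ton_h:.0f} ton-h")
    print(f"800-ton chiller alone, unmet:                {without.unmet_ton_h:.0f} ton-h")
```

With storage, the 800-ton chiller covers the entire hypothetical day and recharges the store overnight. Without storage, the same day would need a 1,000-ton chiller sized for the peak.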

- I think we've got a lot of exciting things ahead of us. Can I ask you one last question, Dr. Agonafer? I know you're very, very busy, but I'm curious to know: what keeps you up at night in terms of challenges, things that you're not sure we're really gonna solve? And on the flip side, what gets you out of bed in the morning? What are you most excited about when you think about future prospects for this industry?

- I think the opportunities for liquid cooling, which by the way only really started in the last three years or so, are just now growing exponentially, right? I think it's important to keep in mind: is the technology we're utilizing sustainable? That is something I think about. In fact, when I teach thermo, we have a section on renewable energy worth 25% of the grade. The students do special projects. It could be solar, whatever it is, and they come up with ideas for what they would like to do. So yes, I think the current direction of power increase is crazy. We can't just look at those charts and say, you know what, we're just gonna have to accept it. But on the other hand, liquid cooling is just starting, so it's very exciting to be involved in it. You asked me what keeps me up? I don't stay up that late, but at 5:00 a.m. I'm up. And I'm always excited. I'm not kidding you. Probably way too much. I keep saying, industry interaction is critical. One other example: in this ARPA-E project, we work with a company called Accelsius, which has a two-phase, 250 kW CDU. Without them, we couldn't do it. I also want to acknowledge that in this new technology we're developing, two-phase for example, Trane has been really instrumental. They donated two brand-new 50-ton chillers worth over $600,000. Without that, we wouldn't be able to scale up what we're doing right now. We need to really have a better dialogue between academia and industry, and that's really the way I think our nation can, you know, succeed.

- I couldn't agree more. And I'd love to hear from our listeners, what innovations in cooling or energy efficiency are you most excited about, and do you think that could change the way that your facilities operate? Leave us a comment and let us know.

- And that's it for this time. This has been the "Healthy Spaces Podcast" with me, Dominique Silva and my wonderful co-host Scott Tew. If you want to know more about the topics covered today, you'll find all the links in the show notes. We're back in two weeks with another episode, so be sure to like and subscribe so that you don't miss out. Thank you for joining. We'll see you next time.

Featured in this Episode:

Hosts:

- Dominique Silva, Marketing Leader EMEA, Trane Technologies
- Scott Tew, Global Head and VP, Sustainability Strategy, Trane Technologies

Guests:

- Scott Smith, Director of Mission Critical Offerings, Trane Technologies
- Dr. Dereje Agonafer, Presidential Distinguished Professor in the Department of Mechanical and Aerospace Engineering, University of Texas at Arlington

About Healthy Spaces

Healthy Spaces is a podcast by Trane Technologies where experts and disruptors explore how climate technology and innovation are transforming the spaces where we live, work, learn and play.

This season, hosts Dominique Silva and Scott Tew bring a fresh batch of uplifting stories, featuring inspiring people who are overcoming challenges to drive positive change across multiple industries. We’ll discover how technology and AI can drive business growth, and help the planet breathe a little bit easier.

Listen and subscribe to Healthy Spaces on your favorite podcast platforms.

How are you making an impact? What sustainable innovation do you think will change the world?
